One performance issue i faced at a recent project was with the way that our constraints were being managed, we were using Subsonic as our ORM, and it has a useful tool for generating your ORM code called SubStage – once configured, you can regenerate your DAL code easily based on your database schema, and it can even be integrated into your build as a pre-build event if you want to do this. SubStage also offers the useful feature of being able to generate DDL scripts for your entire database, and can script your data for you too.

The problem came when we decided to use the generate scripts feature to migrate the database onto a test database instance – it turns out that the DDL scripts that it generates include the WITH NOCHECK option, so when we executed them on the test instance, and performed some testing, we found that performance wasn’t as expected.

A constraint can be disabled, enabled but not trusted, or enabled and trusted. When it is disabled, data can be inserted that violates the constraint because it is not being enforced, this is useful for bulk load scenarios where performance is important. So what does it mean to say that a constraint is trusted or not trusted? Well this refers to the SQL Server Query Optimizer, and whether it trusts that the constraint is valid. If it trusts the constraint then it doesn’t check it is valid when executing a query, so the query can be executed much faster.

Here is an example base in this article on TechNet, here we create two tables with a Foreign Key constraint between them, and add a single row to each. We then query the tables:

1DROP TABLE t2 2 DROP TABLE t1 3 GO 4 5 CREATE TABLE

t1(col1 int NOT NULL PRIMARY KEY) 6 CREATE TABLE

t2(col1 int NOT NULL) 7 8 ALTER TABLE t2 WITH CHECK ADD

CONSTRAINT fk_t2_t1 FOREIGN KEY(col1) 9 REFERENCES

t1(col1) 10 11 INSERT INTO t1 VALUES(1) 12 INSERT INTO t2 VALUES(1) 13 GO14

15 SELECT COUNT(*) FROM t2 16 WHERE

EXISTS17(SELECT * 18 FROM t1 19 WHERE t1.col1 = t2.col1)This all works fine, and in this scenario the constraint is enabled and trusted. We can verify this by executing the following SQL to query the ‘is_disabled’ and ‘is_not_trusted’ properties:

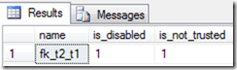

1 select name, is_disabled, is_not_trusted from sys.foreign_keysThis gives the following result:

We can disable the constraint using this SQL:

1 alter table t2 NOCHECK CONSTRAINT fk_t2_t1And when we query the constraints again, we see that the constraint is disabled and not trusted:

So the constraint won’t be enforced and we can insert data into the table t2 that doesn’t match the data in t1, but we don’t want to do this, so we can enable the constraint again using this SQL:

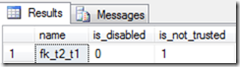

1 alter table t2 CHECK CONSTRAINT fk_t2_t1But when we query the constraints again, we see that the constraint is enabled, but it is still not trusted:

This means that the optimizer will check the constraint each time a query is executed over it, which will impact the performance of the query, and this is definitely not what we want, so we need to make the constraint trusted by the optimizer again.

First we should check that our constraints haven’t been violated, which we can do by running DBCC:

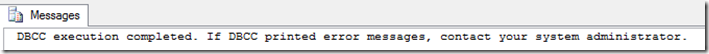

1 DBCC CHECKCONSTRAINTS (t2)Hopefully you see the following message indicating that DBCC completed without finding any violations of your constraint:

Having verified that the constraint was not violated while it was disabled, we can simply execute the following SQL:

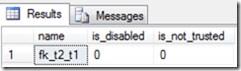

1 alter table t2 WITH CHECK CHECK CONSTRAINT fk_t2_t1At first glance this looks like it must be a typo to have the keyword CHECK repeated twice in succession, but it is the correct syntax and when we query the constraints properties, we find that it is now trusted again:

To fix our specific problem, we created a script that checked all constraints on our tables, using the following syntax:

1 ALTER TABLE t2 WITH CHECK CHECK CONSTRAINT ALLPRINT | POSTED ON MONDAY, JANUARY 31, 2011 5:12 PM